Notation

Recall,

- Lower-case

- Upper-case

- Treating

- With independence,

- Each individual probability is computed (at least theoretically) with a PDF (probability density function)

Inverse

Suppose that we have a sample of data

- Since the prior probability

… we say that the posterior probability is proportional to the likelihood.

Likelihood

Let the likelihood function, in terms of a parameter

or

Suppose that we have data for how long a certain type and brand of light bulb operated (under the same working conditions), and that the data, in months, were

- Build the likelihood function assuming an exponential distribution.

- Compute the likelihood that

- Compute the likelihood that
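The exercise above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the lifetimes below are hypothetical placeholders (not the data from the notes), the exponential distribution is parameterized by a rate, and the names `exp_pdf` and `likelihood` are made up for this sketch.

```python
import math

# Hypothetical lifetimes in months (placeholders, NOT the data from the notes).
lifetimes = [10.0, 14.0, 7.0, 21.0, 12.0]

def exp_pdf(x, rate):
    """Density of an exponential distribution with rate parameter `rate` (assumed parameterization)."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0

def likelihood(rate, data):
    """Product of the individual densities; the product form relies on independence."""
    result = 1.0
    for x in data:
        result *= exp_pdf(x, rate)
    return result

# Evaluate the likelihood at a few candidate rates (arbitrary illustrative values).
for rate in (0.05, 0.08, 0.2):
    print(f"rate={rate}: L={likelihood(rate, lifetimes):.3e}")
```

Comparing the printed values shows which candidate rate makes the observed sample more plausible under the model.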

Log Likelihood

You know that logarithms make large numbers smaller. More precisely,

Example:

Did you know that logarithms make small numbers larger (in magnitude)? More precisely,

Example:

From pre-calculus, recall the properties of logarithms:
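As a quick numerical check, the sketch below tests the standard product, quotient, and power rules for the natural logarithm (assumed to be the properties meant here) and shows how logarithms treat very large and very small inputs. The values of `a` and `b` are arbitrary positive numbers chosen for illustration.

```python
import math

a, b = 2500.0, 0.004  # arbitrary positive numbers, chosen only for illustration

# Product rule: ln(a*b) = ln(a) + ln(b)
print(math.isclose(math.log(a * b), math.log(a) + math.log(b)))

# Quotient rule: ln(a/b) = ln(a) - ln(b)
print(math.isclose(math.log(a / b), math.log(a) - math.log(b)))

# Power rule: ln(a**3) = 3*ln(a)
print(math.isclose(math.log(a ** 3), 3 * math.log(a)))

# A very large input maps to a modest positive number ...
print(math.log(1e12))
# ... while an input close to zero maps to a negative number of much larger magnitude.
print(math.log(1e-12))
```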

For modeling with the exponential distribution, we saw that the likelihood function was

We take the natural logarithm to compute the log-likelihood function

- Compute the log-likelihood that

- Compute the log-likelihood that
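A minimal sketch of the log-likelihood computation follows, again under assumptions: the lifetimes are hypothetical placeholders rather than the data from the notes, the exponential distribution uses a rate parameter, and the function names are invented for illustration. For n observations, taking the logarithm of the product of densities gives n·ln(rate) − rate·(sum of the data), which is what `log_likelihood` computes.

```python
import math

# Hypothetical lifetimes in months (placeholders, NOT the data from the notes).
lifetimes = [10.0, 14.0, 7.0, 21.0, 12.0]

def likelihood(rate, data):
    """Product of exponential densities rate * exp(-rate * x)."""
    return math.prod(rate * math.exp(-rate * x) for x in data)

def log_likelihood(rate, data):
    """ln L(rate) = n * ln(rate) - rate * sum(data), obtained via the product and power rules."""
    return len(data) * math.log(rate) - rate * sum(data)

rate = 0.08  # arbitrary candidate value for illustration

# On a small sample the two routes agree.
print(math.log(likelihood(rate, lifetimes)), log_likelihood(rate, lifetimes))

# On a large sample the raw product underflows to zero, but the log-likelihood stays finite.
big_sample = lifetimes * 400  # 2000 hypothetical observations
print(likelihood(rate, big_sample), log_likelihood(rate, big_sample))
```

The second comparison hints at one practical reason for working on the log scale: sums of logarithms remain representable in floating point where long products of small densities do not.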

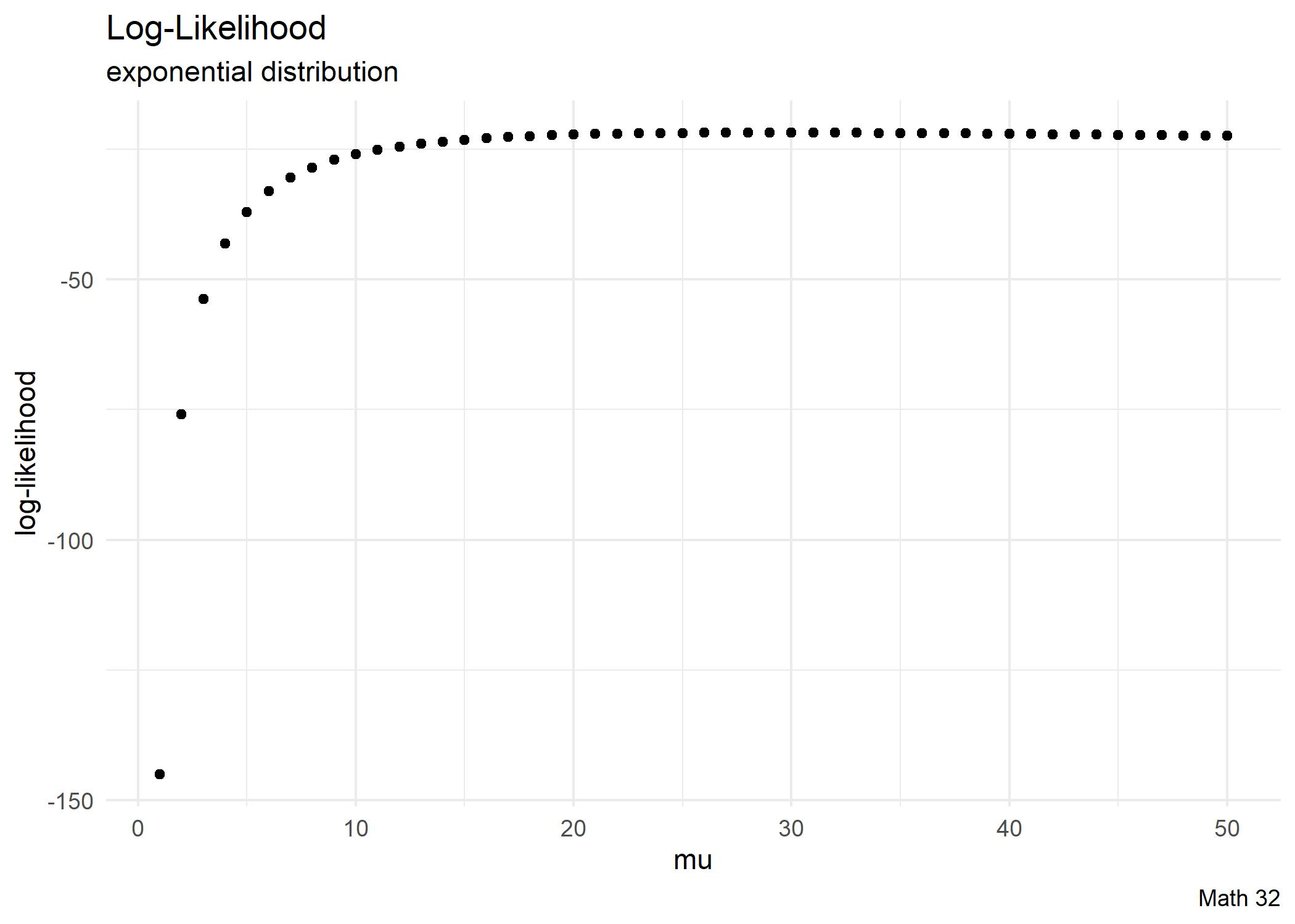

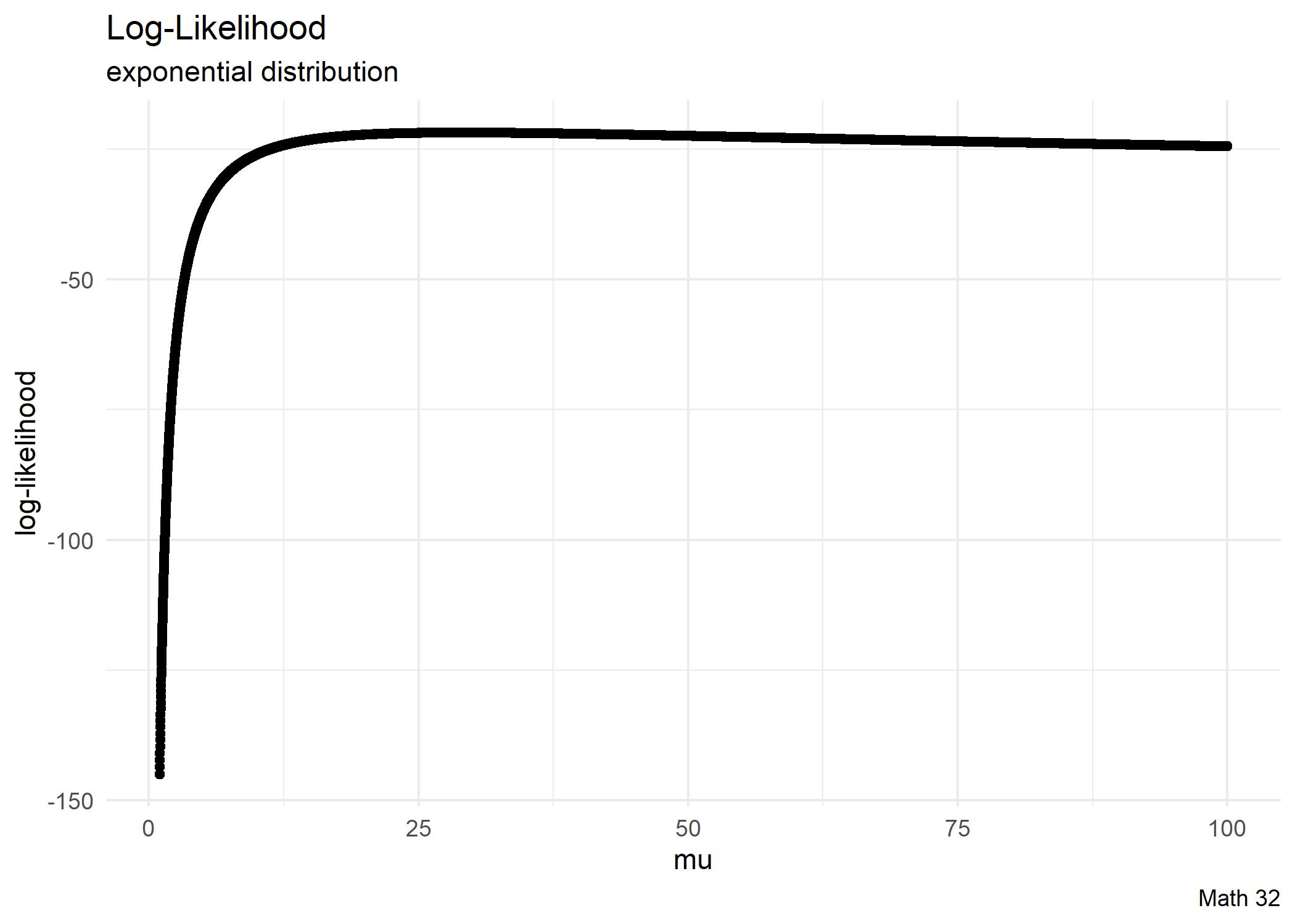

Visuals

Looking Ahead

WHW9

Exam 2, Mon., Apr. 10

- more information in weekly announcement