Today: Estimators

Goal: Explore generalization from samples to populations

Objectives: Determine whether each of the following is a biased or an unbiased estimator of its population counterpart:

- sample mean

- sample variance

- sample standard deviation

Demographics Example

From our Demographics Survey data of Math 32 students, suppose that the following is a sample of observations of heights (in inches):

- then

Suppose that the following is another sample of heights:

- then

Suppose that the following is another sample of heights:

- then

Observe: the sample mean usually changes when we draw a new set of observations.

- Can we calculate the average height of UC Merced students?

- How can we calculate the average height of UC Merced students?

Thought: what if we take a mean of the sample means?

Estimators

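Let $\hat{\theta}$ be an estimator, that is, a statistic computed from a sample.

If we are trying to estimate a population parameter $\theta$, we say that $\hat{\theta}$ is an unbiased estimator of $\theta$ if $E[\hat{\theta}] = \theta$; otherwise, $\hat{\theta}$ is a biased estimator, with bias $E[\hat{\theta}] - \theta$.

Today, we will look at situations where the population parameter $\theta$ is the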

- mean

- variance

- standard deviation
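The bias of an estimator $\hat{\theta}$ of a parameter $\theta$, namely $E[\hat{\theta}] - \theta$, can be approximated by simulation: average the estimator over many samples and subtract the true value. The sketch below assumes samples from $U(0,1)$ with $n = 25$ (matching the simulations in this lecture); the helper name `estimate_bias` and its arguments are hypothetical, not part of the lecture code.

```r
# Hypothetical helper (not part of the lecture code): Monte Carlo estimate of
# bias = E[theta_hat] - theta, approximated by averaging the estimator over
# many simulated samples drawn from U(0,1).
estimate_bias <- function(estimator, true_value, sample_size = 25, reps = 10000) {
  # replicate() re-runs the expression reps times, drawing a fresh sample each time
  estimates <- replicate(reps, estimator(runif(sample_size, 0, 1)))
  mean(estimates) - true_value
}

set.seed(32)  # arbitrary seed, for reproducibility only

# The sample mean as an estimator of the U(0,1) population mean, 1/2
bias_mean <- estimate_bias(mean, true_value = 1/2)
print(bias_mean)
```

Passing a different function as `estimator` (for example, one of the variance formulas later in this lecture, with `true_value = 1/12`) reuses the same simulation; a bias near 0 suggests, but does not prove, unbiasedness.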

Mean

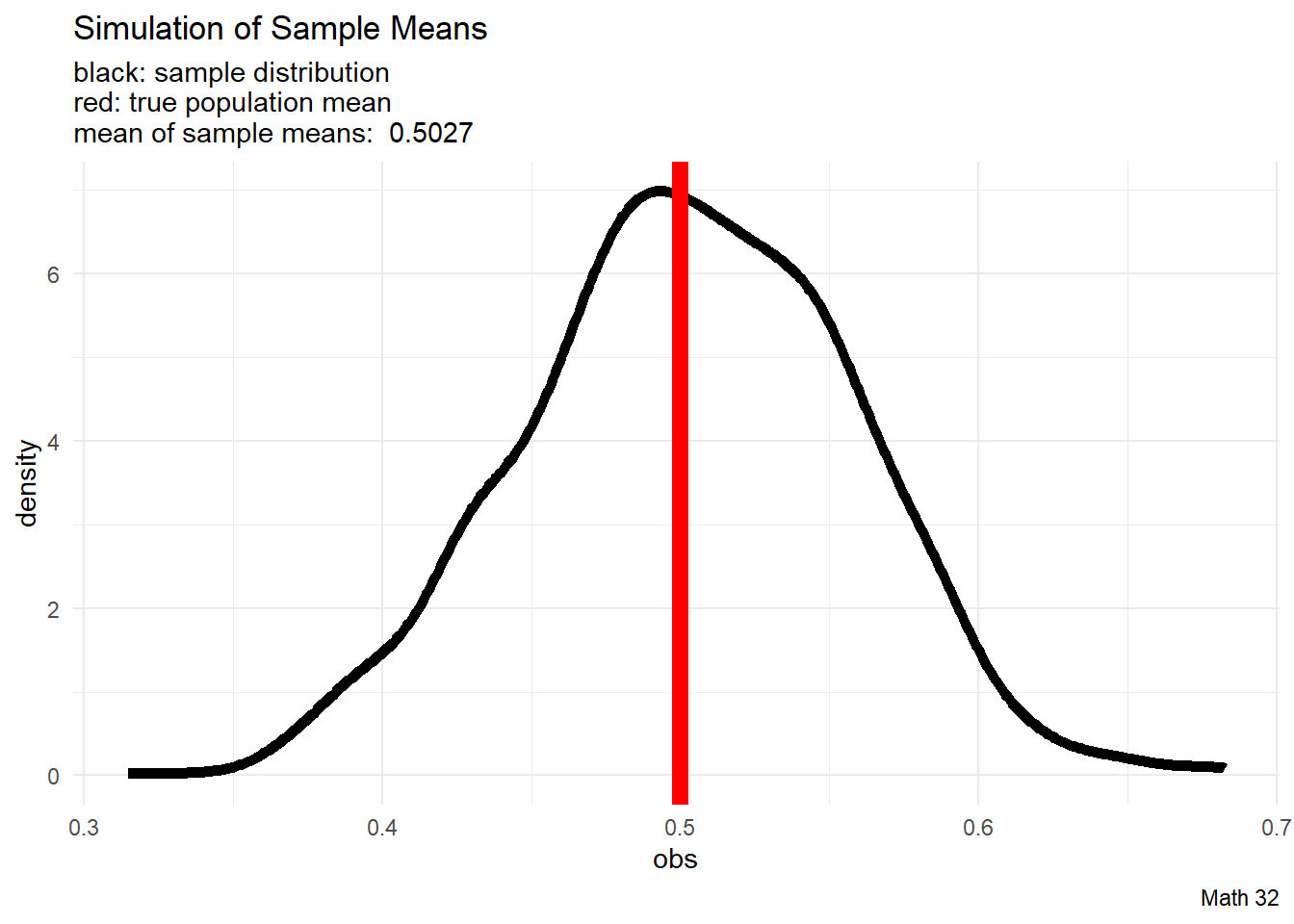

We will run simulations with $X \sim U(0,1)$, whose population mean is $\mu = 1/2$.

```r
library(ggplot2)

N <- 1337 # number of iterations
n <- 25   # sample size

# pre-allocate vector of space for observations
obs <- rep(NA, N)

# run simulation
for(i in 1:N){
  these_numbers <- runif(n, 0, 1)  # sample n numbers from U(0,1)
  obs[i] <- mean(these_numbers)    # record sample mean
}

# mean of observations
mean_of_obs <- mean(obs)

# make data frame
df <- data.frame(obs)

# visualization (linewidth replaces the deprecated size argument, ggplot2 >= 3.4.0)
df |>
  ggplot(aes(x = obs)) +
  geom_density(color = "black", linewidth = 2) +
  geom_vline(xintercept = 1/2, color = "red", linewidth = 3) +
  labs(title = "Simulation of Sample Means",
       subtitle = paste("black: sampling distribution\nred: true population mean\nmean of sample means: ", round(mean_of_obs, 4)),
       caption = "Math 32") +
  theme_minimal()
```

Loosely speaking, since the sampling distribution “lines up” with (is centered at) the population mean, we say that the sample mean is an unbiased estimator of the population mean.

Therefore, $E[\bar{X}] = \mu$.
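A short derivation shows why the sample mean is unbiased in general, not just for $U(0,1)$. It assumes only that each observation $X_1, \dots, X_n$ has mean $\mu$ (this notation is introduced here) and uses linearity of expectation; independence is not needed for this step.

```latex
E[\bar{X}] = E\left[\frac{1}{n}\sum_{i=1}^{n} X_i\right]
           = \frac{1}{n}\sum_{i=1}^{n} E[X_i]
           = \frac{1}{n}\cdot n\mu
           = \mu
```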

Population Variance

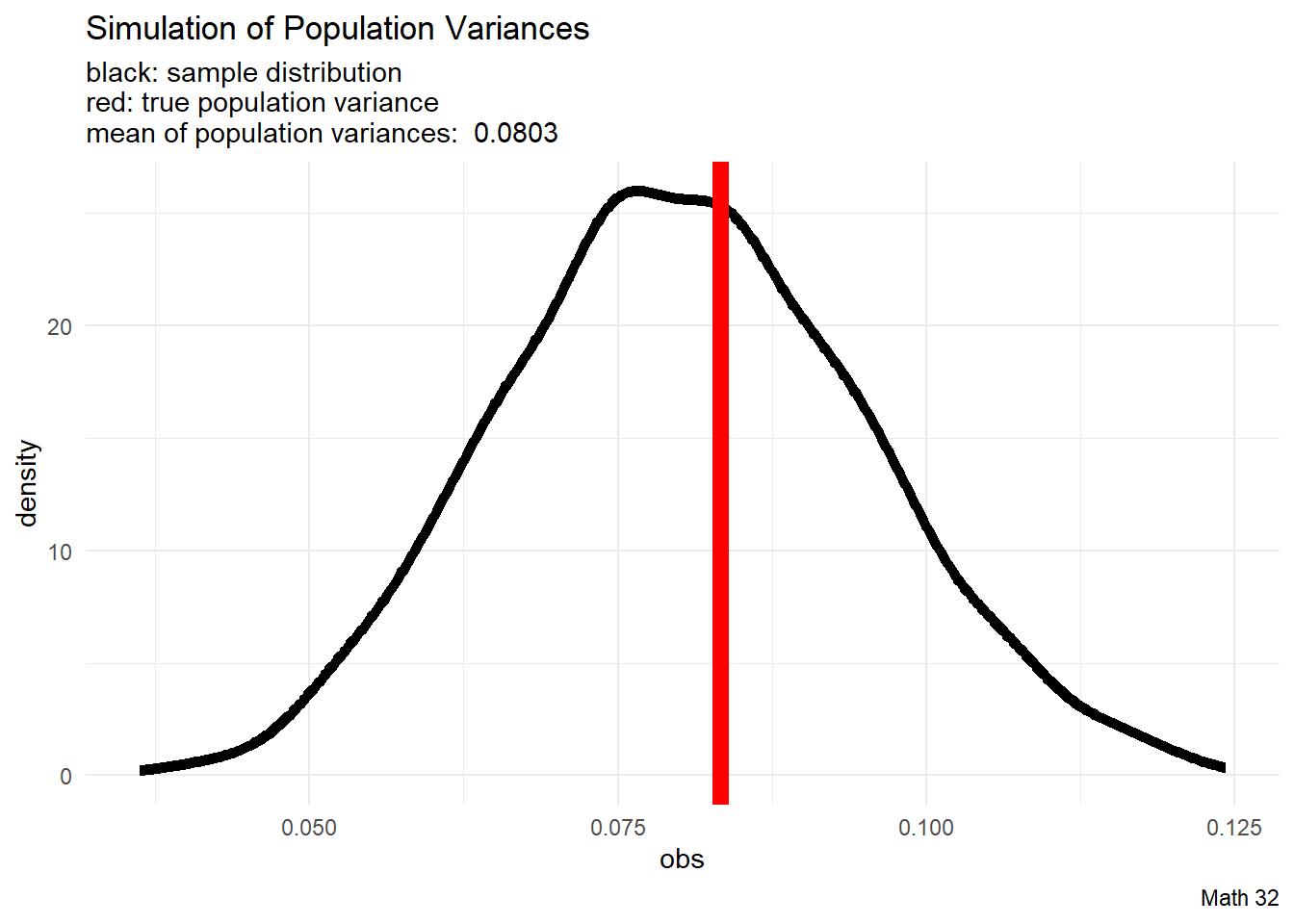

We will run simulations with $X \sim U(0,1)$, whose population variance is $\sigma^2 = 1/12$.

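We will explore what happens if we apply the population variance formula

$\sigma^2 = \frac{1}{N}\sum_{i=1}^{N} (x_i - \mu)^2$

to samples (so the sample mean plays the role of $\mu$ and the sample size plays the role of $N$).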

```r
library(ggplot2)

# user-defined function: variance with N in the denominator
pop_var <- function(x){
  x <- x[!is.na(x)]   # drop missing values
  N <- length(x)      # population size
  mu <- mean(x)       # population mean
  # return population variance (note use of "N")
  sum( (x - mu)^2 ) / N
}

N <- 1337 # number of iterations
n <- 25   # sample size

# pre-allocate vector of space for observations
obs <- rep(NA, N)

# run simulation
for(i in 1:N){
  these_numbers <- runif(n, 0, 1)     # sample n numbers from U(0,1)
  obs[i] <- pop_var(these_numbers)    # record population variance
}

# mean of observations
mean_of_obs <- mean(obs)

# make data frame
df <- data.frame(obs)

# visualization
df |>
  ggplot(aes(x = obs)) +
  geom_density(color = "black", linewidth = 2) +
  geom_vline(xintercept = 1/12, color = "red", linewidth = 3) +
  labs(title = "Simulation of Population Variances",
       subtitle = paste("black: sampling distribution\nred: true population variance\nmean of population variances: ", round(mean_of_obs, 4)),
       caption = "Math 32") +
  theme_minimal()
```

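Loosely speaking, since this formula tends to underestimate the population variance (its sampling distribution is centered slightly below $1/12$), we say that the population variance formula (with $N$ in the denominator) is a biased estimator of the population variance.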
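To gauge how large the underestimate is, the sketch below compares the average of the $N$-denominator formula with $\frac{n-1}{n}\sigma^2$, the standard expected value of that formula, for a few sample sizes. It assumes $U(0,1)$ samples ($\sigma^2 = 1/12$); the vectorized matrix approach, the seed, and the choice of sizes 5, 25, and 100 are editorial choices, not from the lecture.

```r
# Sketch: how much does the N-denominator formula underestimate sigma^2 = 1/12?
pop_var <- function(x) {
  x <- x[!is.na(x)]                 # drop missing values
  sum((x - mean(x))^2) / length(x)  # divide by the number of observations
}

set.seed(32)    # arbitrary seed, for reproducibility only
reps  <- 20000  # number of simulated samples per sample size
sizes <- c(5, 25, 100)

avg_pop_var <- sapply(sizes, function(n) {
  samples <- matrix(runif(reps * n, 0, 1), nrow = reps)  # one sample per row
  mean(apply(samples, 1, pop_var))                        # average over samples
})

result <- data.frame(n = sizes,
                     mean_of_pop_var = round(avg_pop_var, 4),
                     predicted = round((sizes - 1) / sizes / 12, 4))
print(result)
```

The gap between the simulated averages and $1/12$ shrinks as $n$ grows, which is consistent with the factor $\frac{n-1}{n}$ approaching 1.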

Bessel’s Correction

Can we rescale the variance computation so that it becomes an unbiased estimator of the population variance?

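Let $X_1, X_2, \dots, X_n$ be independent observations from a population with mean $\mu$ and variance $\sigma^2$, and let $\bar{X}$ be their sample mean.

We will compute the value of $E\left[\sum_{i=1}^{n} (X_i - \bar{X})^2\right]$.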

Lemma:

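We have derived the formula for the sample variance

$s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2.$

That is, the “$n-1$” in the denominator (Bessel’s correction) is exactly the rescaling that makes the sample variance an unbiased estimator of the population variance $\sigma^2$.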
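One standard way to carry out this computation is sketched below, assuming independent observations $X_1, \dots, X_n$ with mean $\mu$ and variance $\sigma^2$ (independence is what gives $\operatorname{Var}(\bar{X}) = \sigma^2/n$). The lecture's own Lemma may organize the steps differently.

```latex
\begin{aligned}
\sum_{i=1}^{n} (X_i - \bar{X})^2 &= \sum_{i=1}^{n} X_i^2 - n\bar{X}^2 \\
E[X_i^2] &= \operatorname{Var}(X_i) + (E[X_i])^2 = \sigma^2 + \mu^2 \\
E[\bar{X}^2] &= \operatorname{Var}(\bar{X}) + (E[\bar{X}])^2 = \frac{\sigma^2}{n} + \mu^2 \\
E\left[\sum_{i=1}^{n} (X_i - \bar{X})^2\right] &= n(\sigma^2 + \mu^2) - n\left(\frac{\sigma^2}{n} + \mu^2\right) = (n-1)\sigma^2 \\
E\left[\frac{1}{n-1}\sum_{i=1}^{n} (X_i - \bar{X})^2\right] &= \sigma^2
\end{aligned}
```

Dividing by $n$ instead of $n-1$ would give an expected value of $\frac{n-1}{n}\sigma^2$, which is the underestimate seen in the population-variance simulation.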

Sample Variance

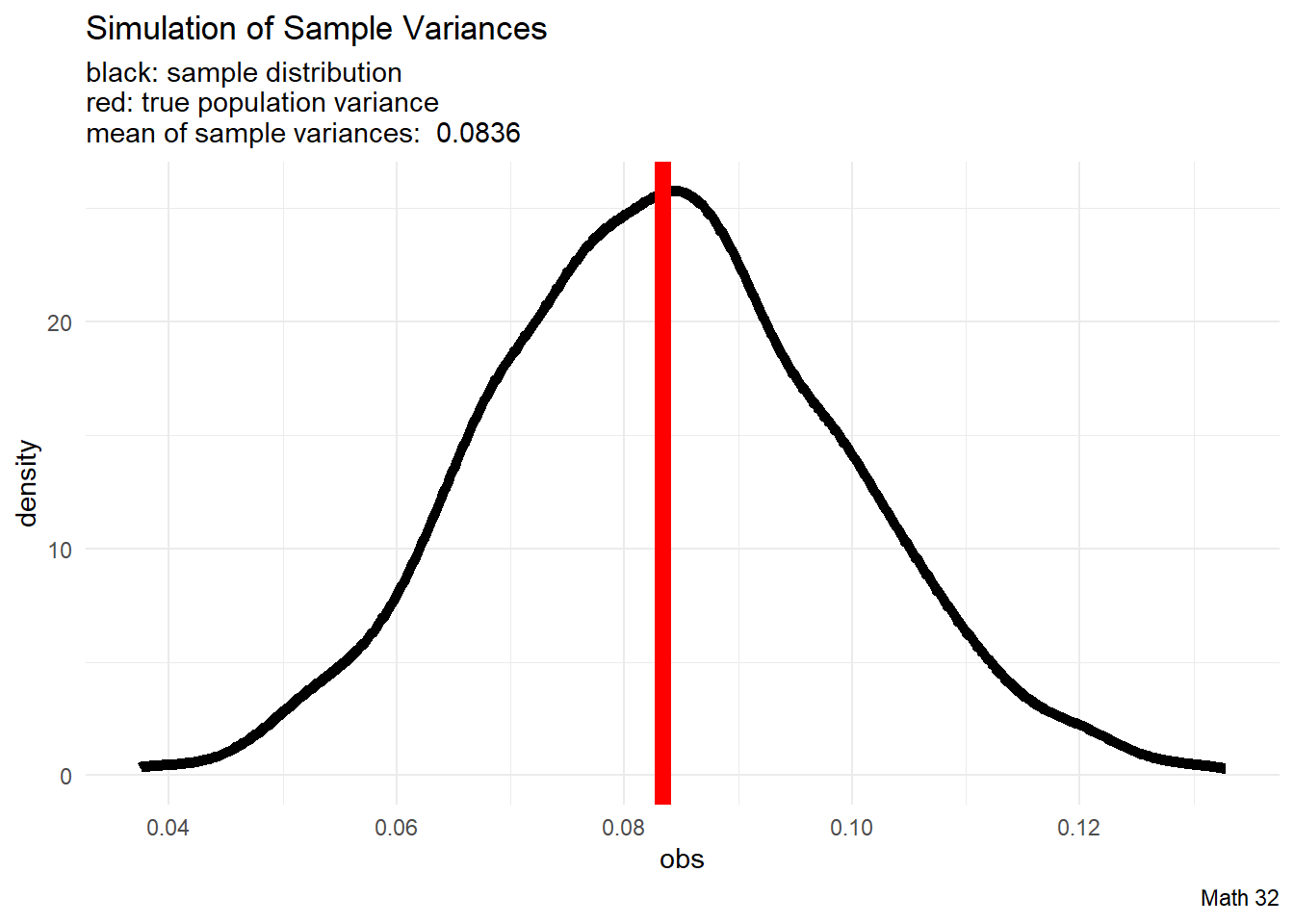

We will run simulations with $X \sim U(0,1)$, whose population variance is $\sigma^2 = 1/12$.

We will explore what happens if we apply the sample variance formula

$s^2 = \frac{1}{n-1}\sum_{i=1}^{n} (x_i - \bar{x})^2$

to samples.

```r
library(ggplot2)

# user-defined function: variance with n - 1 in the denominator
samp_var <- function(x){
  x <- x[!is.na(x)]   # drop missing values
  n <- length(x)      # sample size
  xbar <- mean(x)     # sample mean
  # return sample variance (note use of "n-1")
  sum( (x - xbar)^2 ) / (n-1)
}

N <- 1337 # number of iterations
n <- 25   # sample size

# pre-allocate vector of space for observations
obs <- rep(NA, N)

# run simulation
for(i in 1:N){
  these_numbers <- runif(n, 0, 1)     # sample n numbers from U(0,1)
  obs[i] <- samp_var(these_numbers)   # record sample variance
}

# mean of observations
mean_of_obs <- mean(obs)

# make data frame
df <- data.frame(obs)

# visualization
df |>
  ggplot(aes(x = obs)) +
  geom_density(color = "black", linewidth = 2) +
  geom_vline(xintercept = 1/12, color = "red", linewidth = 3) +
  labs(title = "Simulation of Sample Variances",
       subtitle = paste("black: sampling distribution\nred: true population variance\nmean of sample variances: ", round(mean_of_obs, 4)),
       caption = "Math 32") +
  theme_minimal()
```

Loosely speaking, since the sampling distribution “lines up” with the population variance, we say that the sample variance (with $n-1$ in the denominator) is an unbiased estimator of the population variance.
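In practice there is no need to write this function by hand: base R's `var()` already divides by $n-1$ (and `sd()` is its square root). The sketch below checks that on one simulated $U(0,1)$ sample and shows that the $N$-denominator version is the same quantity rescaled by $\frac{n-1}{n}$; the seed and the variable name `pop_version` are illustrative choices.

```r
# Sketch: base R's var() uses the n - 1 denominator, like samp_var() above
samp_var <- function(x) {
  x <- x[!is.na(x)]                         # drop missing values
  sum((x - mean(x))^2) / (length(x) - 1)    # divide by n - 1
}

set.seed(32)            # arbitrary seed, for reproducibility only
x <- runif(25, 0, 1)    # one sample of size 25 from U(0,1)

print(c(samp_var = samp_var(x), var = var(x)))
print(isTRUE(all.equal(samp_var(x), var(x))))  # equal up to floating-point tolerance

# The N-denominator version is var(x) rescaled by (n - 1) / n
n <- length(x)
pop_version <- var(x) * (n - 1) / n
```

With missing values, `var(x, na.rm = TRUE)` plays the role of the `is.na()` filtering inside `samp_var()`.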

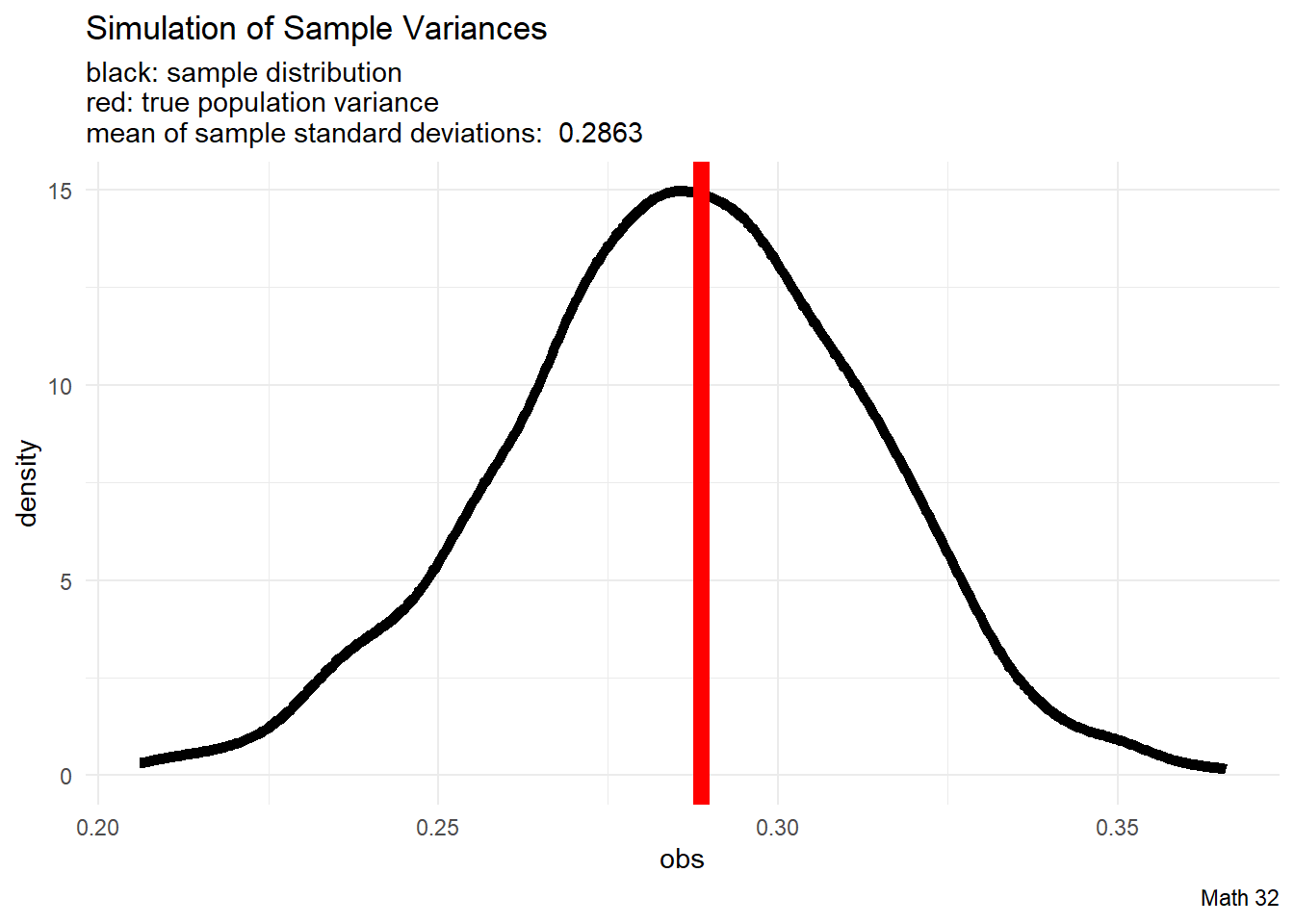

Sample Standard Deviation

We will run simulations with $X \sim U(0,1)$, whose population standard deviation is $\sigma = \sqrt{1/12} \approx 0.2887$.

We will explore what happens if we apply the sample variance formula to samples and then take the square root, $s = \sqrt{s^2}$.

```r
library(ggplot2)

# user-defined function: variance with n - 1 in the denominator
samp_var <- function(x){
  x <- x[!is.na(x)]   # drop missing values
  n <- length(x)      # sample size
  xbar <- mean(x)     # sample mean
  # return sample variance (note use of "n-1")
  sum( (x - xbar)^2 ) / (n-1)
}

N <- 1337 # number of iterations
n <- 25   # sample size

# pre-allocate vector of space for observations
obs <- rep(NA, N)

# run simulation
for(i in 1:N){
  these_numbers <- runif(n, 0, 1)            # sample n numbers from U(0,1)
  obs[i] <- sqrt(samp_var(these_numbers))    # record sample standard deviation
}

# mean of observations
mean_of_obs <- mean(obs)

# make data frame
df <- data.frame(obs)

# visualization
df |>
  ggplot(aes(x = obs)) +
  geom_density(color = "black", linewidth = 2) +
  geom_vline(xintercept = sqrt(1/12), color = "red", linewidth = 3) +
  labs(title = "Simulation of Sample Standard Deviations",
       subtitle = paste("black: sampling distribution\nred: true population standard deviation\nmean of sample standard deviations: ", round(mean_of_obs, 4)),
       caption = "Math 32") +
  theme_minimal()
```

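Let $S = \sqrt{S^2}$ denote the sample standard deviation, where $S^2$ is the sample variance (with $n-1$ in the denominator).

If $S$ were an unbiased estimator of $\sigma$, we would have $E[S] = \sigma$.

However, by Jensen’s Inequality, since the square-root function is concave,

$E[S] = E\left[\sqrt{S^2}\right] \le \sqrt{E[S^2]} = \sqrt{\sigma^2} = \sigma,$

and it follows that $E[S] < \sigma$ whenever $S^2$ is not constant; that is, the sample standard deviation is a biased estimator of $\sigma$ that tends to underestimate it.

In practice, though, the bias is usually so small (and it shrinks as the sample size grows) that it is ignored.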
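To put a number on "small", the sketch below estimates $E[S] - \sigma$ for $U(0,1)$ samples of size $n = 25$, as in the simulation above. The seed, the 20000 replications, and the vectorized matrix approach are editorial assumptions; base R's `sd()` is used because it divides by $n-1$.

```r
# Sketch: how large is the bias of the sample standard deviation for U(0,1)?
set.seed(32)            # arbitrary seed, for reproducibility only
reps  <- 20000          # number of simulated samples
n     <- 25             # sample size, as in the lecture
sigma <- sqrt(1/12)     # true population standard deviation

samples  <- matrix(runif(reps * n, 0, 1), nrow = reps)  # one sample per row
s_values <- apply(samples, 1, sd)                        # sd() uses n - 1

mean_s <- mean(s_values)
print(c(true_sigma = sigma, mean_of_s = mean_s, bias = mean_s - sigma))
```

The estimated bias is negative, as Jensen's Inequality predicts, but tiny compared with $\sigma$ itself.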

Looking Ahead

due Fri., Mar. 24:

- LHW8

no lecture on Mar. 24, Apr. 3

Exam 2 will be on Mon., Apr. 10